Most retail AI is still stuck in the lab. Unlocking its full value starts with scaling real-time inference across operations, experiences, and growth.

The Inflection Point: AI Is Not Failing, It Is Just Not Scaling

Retailers have invested heavily in AI, yet much of it remains stalled at the proof-of-concept stage. Models are built, validated, and showcased, then rarely scaled. The ambition is there. So are the pilots. But the business impact? Still largely untapped.

Why? Because AI success in retail is not just about building models. It’s about making decisions at speed, in the real world, at every customer touchpoint. And that depends on one thing: inference.

Inference is where AI stops being theoretical and starts being useful. It’s the process of running live data through a trained AI model to make a prediction or solve a task. It’s the real-time engine that drives smarter search results, dynamic pricing, accurate fulfillment, and contextual personalization. In short, inference is the key to making AI operational.

In this post, we will explore why the retail AI journey is stalling at deployment and how inference can unlock value at scale. From intelligent edge delivery to digital twins, we will discuss what it takes to move from isolated models to business-wide movement. And with NRF 2026 on the horizon, scaling inference is no longer optional; it’s the most urgent step for retailers aiming to future-proof operations, elevate customer experience, and turn AI into enterprise value.

The Retail Bottleneck: Why Most AI Is Still Stuck in the Lab

AI is not new to retail. Most large retailers today already have models for demand forecasting, fraud detection, and recommendation engines. The problem is not a lack of innovation but a lack of integration.

According to Incisiv’s 2025 Unified Commerce Benchmark, only 5% of retailers harness GenAI to elevate the self-service experience for their customers. Despite the many benefits of scaling AI across operations, many retailers remain stuck in testing or siloed use cases. And the reasons are all too familiar:

- Disconnected data and systems that block real-time execution, and an overreliance on dashboards.

- Traditional or legacy architecture that was not built for inference workloads.

- A mismatch between data science teams and the operational needs of the business.

Retailers often focus on training large models—an expensive, complex, and time-consuming process. But inference is the step that actually delivers value to the business, generating an output, in the moment, in response to a real-world signal.

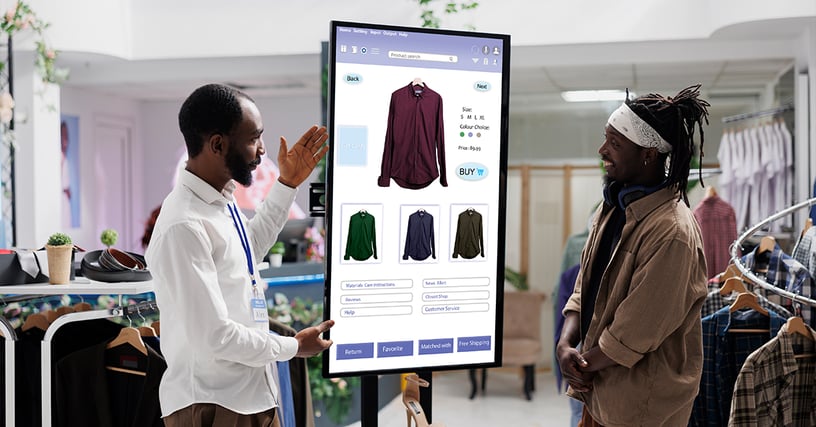

For example, a customer opens the app. Which product should they see first? That is inference. A shopper walks into a store with a loyalty ID. What offer do they get? Inference again. When inference does not scale, models stay stuck, and value never leaves the lab.

The Inference Gap: Models Are Trained, But Not Deployed

Inference is where AI translates into outcomes. Yet in most retail environments, the inference layer is either underbuilt or entirely missing. While model training happens in centralized environments, inference needs to happen where decisions are made (across stores, apps, warehouses, and customer touchpoints). And that introduces a host of architectural challenges:

- Latency and Scale: Real-time decisions can’t afford cloud round trips, especially when scaled across thousands of devices and regions.

- Integration: Inference must be tightly coupled with core systems, including point of sale (POS), order management (OMS), customer relationship management (CRM), and digital frontends.

- Governance: Retailers must guard against model drift, ensure version control, and build in bias mitigation, with safeguards for fairness and accountability.

Incisiv’s report, ‘From Content to Experience: How AI Is Shaping the Future of Marketing’, finds that companies want to prioritize AI adoption and expect it to deliver results rapidly. Yet only 12% are fully prepared for large-scale AI adoption. Most still rely on batch-based models and static rule systems that struggle to keep up with fast-moving demand, changing shopper intent, and fragmented experiences. Additionally, 76% are overwhelmed by the volume and complexity of AI tools, and 63% say the complexity of integrating AI with existing systems and workflows is one of their biggest challenges.

What is missing is an infrastructure layer that enables inference to happen continuously, turning static models into living systems that learn, adapt, and act at speed.

Signals and Actions: Making Retail AI Work in the Real World

Leading retailers are now experimenting with digital twins—virtual models of stores, supply chains, and shopper journeys—that help simulate outcomes before they happen. When paired with scalable inference, these digital twins allow retailers to test promotions, optimize labor, or fine-tune inventory strategies in a risk-free environment. Real-time feedback loops between the physical and digital world unlock smarter, faster decisions.

Inference, in turn, transforms raw signals into action: a location ping, a product view, or a scanned barcode becomes a decision trigger when inference is built into the stack.

Let’s explore how retailers can drive business value and achieve tangible results.

Search that gets smarter in real time

A shopper types in “running shoes.” Traditional keyword matching falls flat. But with inference-powered semantic search, AI can process intent, past behavior, size preferences, and inventory availability to deliver a personalized result set in milliseconds.
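
To make the idea concrete, here is a minimal Python sketch of the re-ranking step. It assumes query and product embeddings already come from some embedding model (not shown). The `Product` fields, the `rank_products` function, and the `size_boost` weight are hypothetical names chosen for illustration, not part of any specific search platform.

```python
from dataclasses import dataclass
from math import sqrt


@dataclass
class Product:
    sku: str
    embedding: list[float]      # assumed output of an embedding model
    sizes_in_stock: set[str]    # sizes currently available to sell


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_products(query_vec, shopper_size, catalog, size_boost=0.2):
    """Rank in-stock products by semantic match, boosting the shopper's size."""
    scored = []
    for product in catalog:
        if not product.sizes_in_stock:
            continue  # never surface items that cannot be fulfilled
        score = cosine(query_vec, product.embedding)
        if shopper_size in product.sizes_in_stock:
            score += size_boost  # illustrative weight, tune per business
        scored.append((score, product.sku))
    scored.sort(reverse=True)
    return [sku for _, sku in scored]
```

In this sketch, an out-of-stock item is dropped even if it is the closest semantic match, and a slightly weaker match can outrank a stronger one when it is available in the shopper's size. Real systems would typically replace the linear scan with a vector index.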

Fulfillment that adapts mid-journey

A customer places an order for same-day delivery. Real-time inference recalculates availability, routes to the optimal node, and adjusts based on traffic, inventory, or order modifications—no manual intervention required.
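
A simplified Python sketch of that routing decision follows. It assumes each fulfillment node reports current stock and an estimated travel time; the `Node` class, the `pick_node` function, and the `cutoff_minutes` parameter are hypothetical and stand in for whatever the OMS and routing services actually expose.

```python
from dataclasses import dataclass


@dataclass
class Node:
    node_id: str
    stock: dict[str, int]     # SKU -> units on hand
    travel_minutes: float     # current estimate, refreshed as traffic changes


def pick_node(order: dict[str, int], nodes: list[Node], cutoff_minutes: float):
    """Return the fastest node that can fill the whole order in time, or None."""
    eligible = [
        node for node in nodes
        if node.travel_minutes <= cutoff_minutes
        and all(node.stock.get(sku, 0) >= qty for sku, qty in order.items())
    ]
    if not eligible:
        return None  # caller decides: split the order, or offer next-day
    return min(eligible, key=lambda node: node.travel_minutes).node_id
```

Because the function is cheap and stateless, it can be re-run whenever a signal changes (a traffic update, a stock adjustment, an order edit), which is what lets the route adapt mid-journey.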

Loyalty that reacts and rewards

Incisiv’s report reveals that 35% of companies expect customer personalization and segmentation to be fully AI-driven in the future. Instead of sending generic offers, retailers can now use AI to infer churn risk, lifetime value, and next-best engagement in real time, and trigger contextual nudges across email, app, or in-store channels.

When inference is embedded across the stack, every touchpoint becomes intelligent, and every action, optimized. But scaling inference requires infrastructure to run models continuously, securely, and at low latency.

From Models to Momentum: Moving AI Beyond Pilots

Retailers do not lack AI models. They lack movement. Movement between teams. Movement between systems. Movement between insight and action.

This is where scalable inference infrastructure becomes the backbone of real-time AI. It bridges trained models and real-world execution, turning strategy into action. Retailers leading in inference maturity are building pipelines to operationalize the AI models they already have, and rethinking three core enablers:

- Inference must run closer to the edge (in-store, in-app, in-distribution nodes), and deployment must be standardized across platforms to avoid model silos.

- AI must be embedded into the daily workflows of planners, merchandisers, marketers, and associates.

- Visibility, trust, and oversight can’t be afterthoughts; they must be built in from the start.

Inference infrastructure shifts AI from a lab experiment to an enterprise advantage. This requires treating AI as an ecosystem rather than a collection of isolated projects. Let’s look at how retailers can bridge the gap and scale for real impact.

Closing the Loop: Your Inference-First Guide to Scalable AI

To move from pilot AI to performant AI, let’s discuss what matters most.

Design for distributed inference

Shift from centralized-only compute to edge and hybrid models that bring decision-making closer to where data is generated.
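
One way to picture a hybrid setup is an edge-first decision with a cloud fallback, sketched below in Python. The assumption here is that both models are callables returning a label and a confidence score. The `decide` function, the `min_confidence` threshold, and the `"edge-degraded"` tag are illustrative names, not a standard API.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]            # (label, confidence in [0, 1])
Model = Callable[[dict], Prediction]


def decide(features: dict, edge_model: Model, cloud_model: Model,
           min_confidence: float = 0.7) -> Tuple[str, str]:
    """Answer locally when the edge model is confident; otherwise ask the cloud.

    Returns (label, source) so downstream systems can see where it came from.
    """
    label, confidence = edge_model(features)
    if confidence >= min_confidence:
        return label, "edge"
    try:
        label, _ = cloud_model(features)
        return label, "cloud"
    except ConnectionError:
        # Store connectivity dropped: keep serving the edge answer.
        return label, "edge-degraded"
```

The design choice worth noting is that the store or app keeps making decisions when the network is unavailable, and the returned source tag makes it possible to measure how often each path is used.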

Treat data as fuel, not just storage

Inference needs real-time data feeds that are unified, clean, and continuously refreshed, from inventory and pricing to shopper behavior and external signals.

Build observability into your AI stack

Track drift, latency, and model performance across inference points. If you can’t see it, you can’t scale it.
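
As a rough illustration, the Python sketch below computes two such signals: a Population Stability Index (PSI), one common way to quantify drift between a baseline and live data, and a nearest-rank 95th-percentile latency. The function names, bin count, and `eps` floor are assumptions made for this example; a production stack would more likely rely on a monitoring tool than hand-rolled code.

```python
import math


def psi(expected: list[float], actual: list[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # avoid zero width for constant baselines

    def shares(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1   # clamp out-of-range values
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(count / len(values), eps) for count in counts]

    base, live = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(base, live))


def p95(latencies_ms: list[float]) -> float:
    """95th-percentile latency using the nearest-rank method."""
    if not latencies_ms:
        raise ValueError("no latency samples")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100   # integer ceil(0.95 * n)
    return ordered[rank - 1]
```

A PSI near zero means the live inputs look like the baseline; a frequently cited rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the model and the feature.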

The bottom line is that AI inference is the front-line enabler of real-time retail. The question is not whether you have AI but whether your AI can move at the speed of retail. Retailers who win this game will outlearn, outmaneuver, and outperform the rest.